Artificially Intelligent, Naturally Blind: Why Smart Machines Still Don’t Get the World

#73: If AI can answer anything, why does it still fail at understanding something as simple as cause and effect?

Your phone can summarize Shakespeare, your fridge can order groceries, and your car might soon drive itself. But here’s a strange question: Do these “intelligent” systems actually understand the world they’re operating in?

In 2025, AI systems can ace standardized tests, generate poetry, and argue about Kantian ethics. But give them a simple real-world scenario, like pouring a glass of juice, and they often stumble. Why? Because they’re missing something fundamental: a model of the world. They’re intelligent, yes, but in the same way a magician is magical: clever sleight of hand, no real depth.

So let’s ask the big one:

Can AI ever be truly intelligent without a world model?

Or are we building glorified parrots with great vocabularies?

The Case for a World Model

Think of a world model as the AI’s internal compass — a mental map of how the world works. Humans use it constantly: If you drop a glass, you don’t need to see it shatter to know it’ll break. You’ve learned causality. You simulate outcomes.

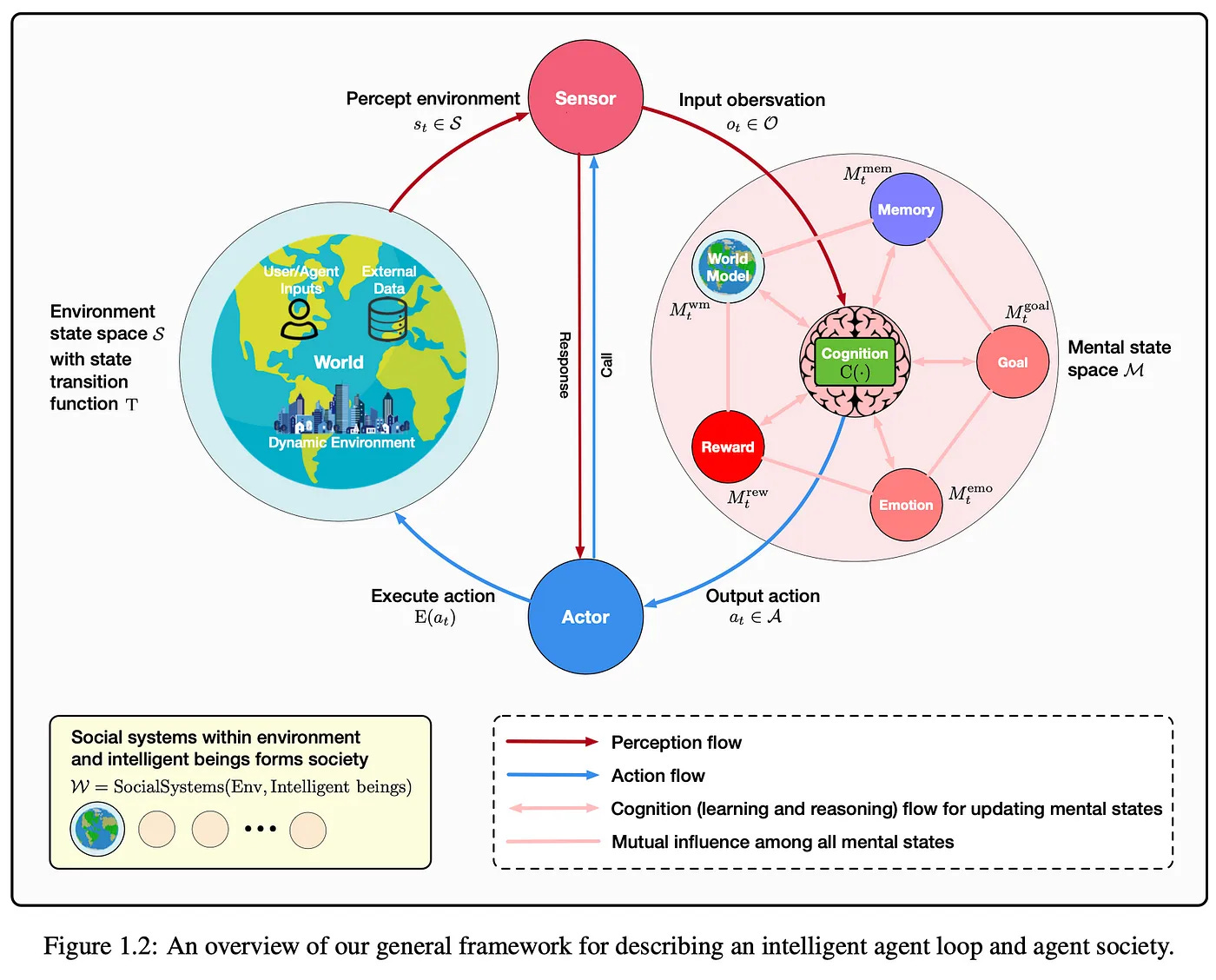

A visual from recent research (Figure 1.2) helps clarify what a world model does within an intelligent agent. On one side, you have the environment — a dynamic world full of user inputs, external data, and state changes. The agent perceives this environment through sensors, feeding observations into its mental state space, which includes memory, goals, emotions, reward signals — and crucially, the world model. At the heart of it all is cognition, where learning and reasoning happen.

From this mental processing center, the agent generates an action, which is executed back into the environment. It’s a loop — perceive, process, act, repeat. What the image emphasizes is that a world model isn’t just a bonus — it’s central. It links past experiences, current observations, and future goals into a coherent picture the agent can act on. Without it, the loop is broken, or at best, blind.
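To make the loop concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than anything taken from the research figure: the one-dimensional world, the `Environment` and `Agent` classes, and the three-action choice are all hypothetical. The point is only to show where a world model sits inside perceive, process, act, repeat.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Toy 1-D world (hypothetical): the agent stands on a number line."""
    position: int = 0
    goal: int = 5

    def observe(self) -> int:
        # Perception: the agent only sees its current position.
        return self.position

    def step(self, action: int) -> None:
        # Execution: the action (-1, 0, or +1) changes the world.
        self.position += action


@dataclass
class Agent:
    goal: int
    memory: list = field(default_factory=list)

    def world_model(self, state: int, action: int) -> int:
        # Internal prediction of the next state for a candidate action.
        return state + action

    def act(self, observation: int) -> int:
        # Store the observation, then "imagine" each action and pick the
        # one whose predicted outcome lands closest to the goal.
        self.memory.append(observation)
        return min(
            (-1, 0, 1),
            key=lambda a: abs(self.goal - self.world_model(observation, a)),
        )


def run(steps: int = 10):
    env = Environment()
    agent = Agent(goal=env.goal)
    for _ in range(steps):
        observation = env.observe()      # perceive
        action = agent.act(observation)  # process
        env.step(action)                 # act
    return env.position, agent.memory
```

Take out `world_model` and the agent has no way to compare actions before trying them, which is the "blind loop" described above.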

Most systems today are statistical engines that react to inputs based on past data. Ask them what happens if you open an umbrella indoors and they might cite superstitions, but they won’t simulate the physics. That’s because they don’t have a world model — they just have patterns.

Without this internal map, AI is like a GPS with no signal: all the computing power in the world, and still lost in a parking lot.

How Do You Build a World Model, Anyway?

If you want an AI to understand the world, it needs more than just training data. It needs a coherent internal representation — something it can use to predict, reason, and plan.

World models attempt to create a compressed mental state of the current environment and then use it to predict future outcomes. They rely on mechanisms such as latent state spaces, which are abstract representations of what the world looks like at any moment; forward models, which simulate what will happen next if a certain action is taken; and inverse models, which work out which action is needed to reach a specific outcome. These aren’t just mathematical gimmicks; they echo how humans learn through interaction rather than memorization.
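A toy sketch can make these three pieces less abstract. The example below assumes a cart moving along a line, with a hand-written physics rule standing in for what a real system would have to learn from data. The names `encode`, `forward_model`, `inverse_model`, and the time step `DT` are all hypothetical choices made for illustration.

```python
from typing import Dict, Tuple

State = Tuple[float, float]  # latent state: (position, velocity)

DT = 0.1  # assumed time step in seconds


def encode(observation: Dict[str, object]) -> State:
    """Latent state: keep only what matters for prediction, drop the rest."""
    return (float(observation["x"]), float(observation["v"]))


def forward_model(state: State, acceleration: float) -> State:
    """Predict the next state if `acceleration` is applied for one time step."""
    x, v = state
    v_next = v + acceleration * DT
    x_next = x + v_next * DT
    return (x_next, v_next)


def inverse_model(state: State, target_x: float) -> float:
    """Find the acceleration that reaches `target_x` in one step.

    Solves target_x = x + (v + a * DT) * DT for a, i.e. it inverts
    forward_model with respect to position.
    """
    x, v = state
    return (target_x - x - v * DT) / (DT * DT)
```

As a usage example, encoding `{"x": 0.0, "v": 1.0, "color": "red"}` throws away the color, `inverse_model` proposes an acceleration for a target position, and feeding that acceleration back into `forward_model` should land on the target. In a learned world model all three functions would be neural networks trained from experience, not formulas written by hand.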

Babies don’t learn physics by reading textbooks; they throw things, fall over, and see what happens. That feedback loop of action and consequence is what builds their model of the world. In AI, we’re attempting the same thing — especially through embodied agents. These are robots or digital characters that learn by acting, whether in the physical world or in rich simulated environments. They poke, prod, and explore their surroundings, gaining insight not through passive absorption but through active engagement.

Why It’s Working Now (Finally)

In the past, world modeling felt like an impossible dream. But in 2025, a combination of breakthroughs is finally making it feasible. One of the biggest enablers has been multimodal learning. AI is no longer confined to text; it can now learn from images, video, audio, and even sensory data, creating a much richer understanding of the environment — more akin to how humans learn.

Simulated environments have also reached new levels of realism. Tools from companies like NVIDIA and DeepMind now let agents explore virtual spaces that mimic real-world physics and complexity, allowing them to build internal models safely and cost-effectively. This shift from static training to interactive simulation is crucial, because it provides the kind of feedback that AI needs to refine its predictions.

At the architectural level, transformers and large neural networks have proven themselves adept not only at memorizing patterns but also at predicting future states. They can learn sequences and, to a degree, the regularities that stand in for causal relationships, which lends itself well to modeling world dynamics. And perhaps most importantly, AI systems are increasingly trained using self-supervised learning methods. Instead of being spoon-fed labels, they learn by predicting what comes next and correcting themselves, much as humans do during trial and error.
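The "predict what comes next and correct yourself" idea fits in a few lines. The sketch below is a deliberately tiny stand-in for what large models do at scale: it assumes a world where each value is a fixed multiple of the previous one, and a one-parameter predictor that learns that multiple from the raw sequence alone. The function names, learning rate, and decay factor are illustrative assumptions.

```python
def make_sequence(decay: float = 0.9, length: int = 10) -> list:
    """Unlabeled data: each value is `decay` times the previous one."""
    values = [1.0]
    for _ in range(length - 1):
        values.append(values[-1] * decay)
    return values


def train_next_step_predictor(sequence, lr: float = 0.1, epochs: int = 200) -> float:
    """Fit x[t+1] ~ w * x[t], using the sequence itself as supervision."""
    w = 0.0
    for _ in range(epochs):
        # Each consecutive pair is a free training example: no human labels.
        for current, actual_next in zip(sequence, sequence[1:]):
            prediction = w * current
            error = prediction - actual_next
            # Gradient step on 0.5 * error**2 with respect to w.
            w -= lr * error * current
    return w
```

Training on `make_sequence()` should drive `w` toward the hidden decay factor. Nobody told the model the rule; it recovered it by repeatedly guessing the next value and shrinking its own error.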

But Here’s the Rub: It Might Not Work

Despite the momentum, building reliable world models is far from guaranteed. One major challenge is the trade-off between generality and specificity. A model that works well across many environments may lack the precision needed for a particular task, while a highly tuned model might struggle to adapt beyond its niche.

Then there’s the infamous “Sim2Real” gap — the disconnect between learning in a simulation and performing in the messy, unpredictable real world. An AI that excels in a virtual kitchen might fail completely when confronted with a real one, because even small inconsistencies in texture, lighting, or behavior can throw it off.

Long-term memory also remains an unsolved problem. Most AI agents today have what you might call goldfish memory — easily distracted, constantly forgetting what just happened. Sustained, context-aware memory is essential for world modeling, and we’re still working on how to engineer that.

Perhaps most concerning is the black-box nature of these systems. Even if an AI has a robust internal model, we often can’t see or understand how it works. This lack of interpretability makes it difficult to trust the system, especially in high-stakes domains like healthcare or autonomous vehicles.

And of course, there’s the ethical elephant in the room: a flawed or biased world model can lead to dangerous decisions. If the AI misunderstands its environment or its goals, the results can be catastrophic.

Who’s Building the Future?

Several major players are racing to crack the code. Google DeepMind now has a team dedicated to building world models that simulate environments for gaming and robotic tasks. NVIDIA’s new Cosmos model family is tailored to help robots and self-driving cars understand their surroundings through 3D video analysis. Other companies, like Meta, OpenAI, and Anthropic, are also experimenting with multi-agent systems, long-term memory modules, and embodied interaction.

In academia, the Foundation Agents project has proposed brain-inspired AI frameworks that integrate modules for memory, perception, reasoning, emotion, and — crucially — world modeling. The goal is to create not just smart machines, but coherent, adaptive agents that learn and evolve in a structured, meaningful way.

The Road Ahead

So where does this lead us?

In the near future, we may see AI assistants that don’t just remember what you told them yesterday but also anticipate your needs based on contextual understanding. We could have “digital twins” of entire cities or even human bodies, letting us run simulations to optimize everything from traffic flow to disease treatment. And on the frontier, AI systems acting as scientists could begin to form hypotheses, conduct virtual experiments, and refine their world models much like human researchers.

These agents won’t just regurgitate facts — they’ll explore, hypothesize, and adapt. In doing so, they could become powerful partners in everything from scientific discovery to social collaboration.

Understanding Is the New Frontier

We’ve built smart models that can talk, write, and even code. But without a world model, they’re doing it blindfolded. They’re impressive, but not grounded. Useful, but not trustworthy.

If we want AI to move from brilliant mimicry to genuine intelligence — from parrots to partners — we have to give them what humans evolved over millions of years: a working understanding of the world.

Until then, we may have artificial intelligence. But we’ll still be staring into artificial ignorance.

A. Pawlowski | The Strategy Stack

Sources:

[2] Clark, J., & Amodei, D. (2023). Anthropic’s Claude: Constitutional AI and alignment. Anthropic. https://www.anthropic.com

[3] DeepMind. (2025, January 7). DeepMind launches world modeling team for gaming and robotics. The Verge. https://www.theverge.com/2025/1/7/24338053/google-deepmind-world-modeling-ai-team-gaming-robot-training

[4] Hassabis, D., et al. (2023). AlphaGo and beyond: The legacy of DeepMind’s planning and reasoning agents. Nature, 601(7891), 193–200. https://doi.org/10.1038/s41586-021-04086-x

[5] Huang, J., & Huang, W. (2024). Multimodal Foundation Models: Trends and Challenges. Proceedings of the IEEE, 112(3), 521–539.

[6] NVIDIA. (2025, February 15). Introducing Cosmos: AI that helps robots and autonomous vehicles understand the world. WIRED. https://www.wired.com/story/nvidia-cosmos-ai-helps-robots-self-driving-cars

[7] Brown, T. B., et al. (2020). Language models are few-shot learners. In Advances in Neural Information Processing Systems (Vol. 33, pp. 1877–1901). https://arxiv.org/abs/2005.14165

[8] Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460.

[9] Vaswani, A., et al. (2017). Attention is all you need. In Advances in Neural Information Processing Systems (pp. 5998–6008). https://arxiv.org/abs/1706.03762

[10] Liu, B., Li, X., Zhang, J., Wang, J., He, T., Hong, S., Liu, H., Zhang, S., Song, K., Zhu, K., Cheng, Y., Wang, S., Wang, X., Luo, Y., Jin, H., Zhang, P., Liu, O., Chen, J., Zhang, H., Yu, Z., Shi, H., Li, B., Wu, D., Teng, F., Jia, X., Xu, J., Xiang, J., Lin, Y., Liu, T., Liu, T., Su, Y., Sun, H., Berseth, G., Nie, J., Foster, I., Ward, L., Wu, Q., Gu, Y., Zhuge, M., Tang, X., Wang, H., You, J., Wang, C., Pei, J., Yang, Q., Qi, X., & Wu, C. (2025). Advances and challenges in foundation agents: From brain-inspired intelligence to evolutionary, collaborative, and safe systems (arXiv:2504.01990). arXiv. https://arxiv.org/abs/2504.01990
