The Illusion of AI: Limits, Bottlenecks, and the Path to AGI

#61: Unmasking the Limits of Intelligence in an Age of Hype

“He who fights with monsters should be careful lest he thereby become a monster. And if you gaze long enough into an abyss, the abyss will gaze back into you.” — Friedrich Nietzsche

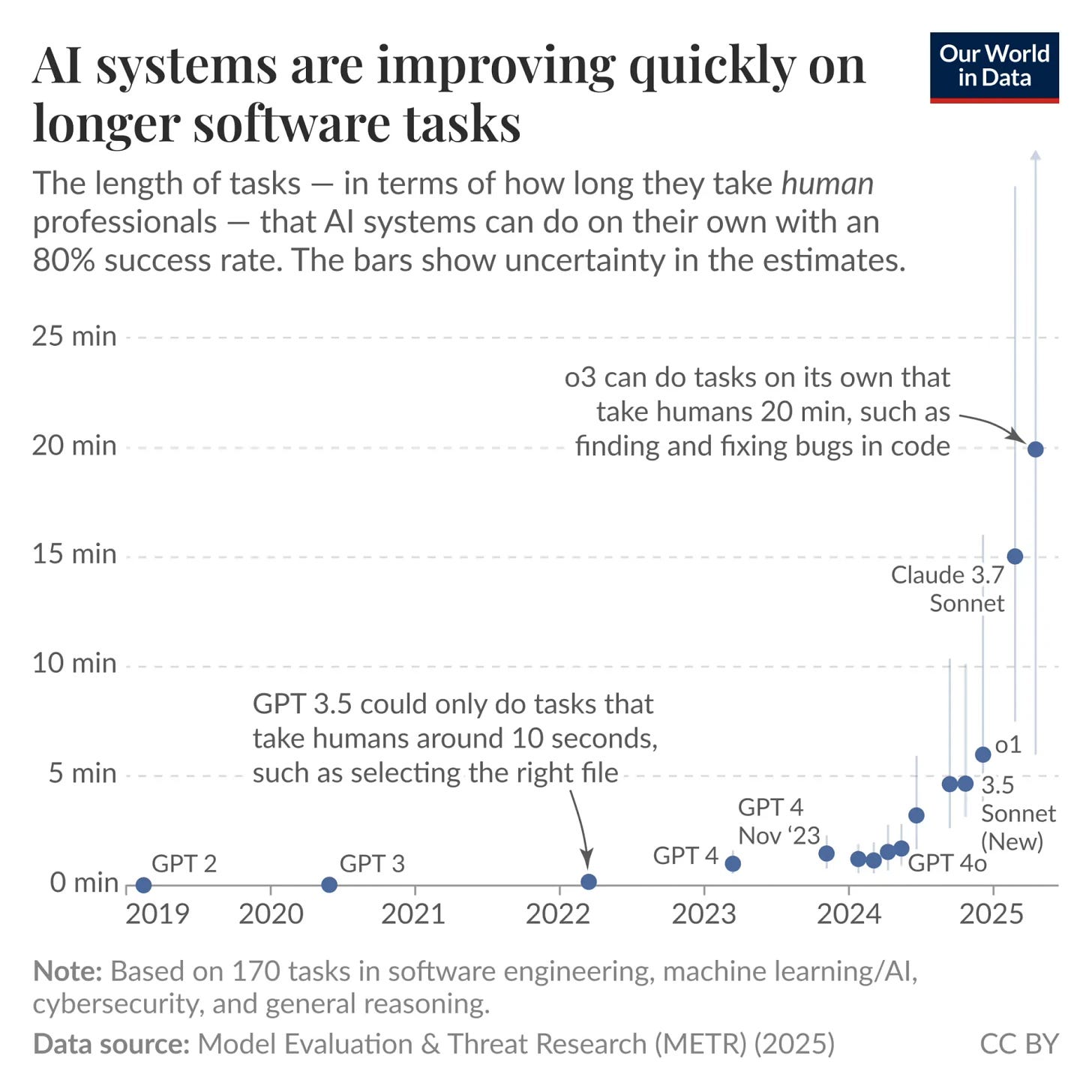

In the current wave of AI hype, it’s easy to assume intelligence has become limitless. But despite real advancements, artificial intelligence still struggles with generalization, creativity, emotional nuance, and transparency.

In this piece, we unpack the real-world capability constraints and systemic bottlenecks of today’s AI. We also explore what’s needed to evolve toward a more ethical, explainable, and scalable form of AI, from 2025 onward.

TL;DR: What You’ll Learn

The 4 core capability limitations and 5 bottlenecks of current AI systems

Data, compute, and bias as strategic obstacles to AI scale

What’s required to reach trustworthy, explainable AGI

A forward-looking roadmap from 2025 to 2030+ (inspired by IBM’s AI roadmap)

Core Capability Limitations of AI Today

Lack of Generalization

AI systems are highly specialized and perform well within narrow domains. For instance, an AI trained to play chess cannot play a different game like Go without retraining.

Interesting fact: According to a study by OpenAI, the success rate of AI transferring skills from one task to another is below 10%.

Understanding Context

AI struggles to understand context the way humans do. This includes nuances in language, cultural references, and situational awareness, although there has been progress, for example in extending tools like ChatGPT beyond text to vision and voice.

Interesting fact: Research indicates that AI language models correctly interpret context in conversations only 70% of the time, compared to 95% for humans.

Creativity and Innovation

AI can generate content that appears creative, such as art and music, but it lacks genuine creativity and innovation. These creations are based on patterns learned from existing data, which raises questions about their authenticity and utility.

Interesting fact: In creative domains, AI-generated content is found to be less original. A survey of art critics rated AI-generated artworks 30% less innovative than human-created ones.

Emotion Recognition

AI systems can detect basic emotions through facial recognition and voice analysis but struggle with complex emotional states and empathy.

Interesting fact: AI’s accuracy in emotion recognition is around 80%, whereas human accuracy is approximately 95%.

…so far so good, and now let’s shift our attention to the bottlenecks:

Bottlenecks Blocking AI Scalability

Data Quality (big one!)

The performance of AI models heavily depends on the quality and quantity of data. Poor quality data leads to inaccurate models.

Interesting fact: A report from IBM highlights that data quality issues cause 55% of AI projects to fail.
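To make “data quality” concrete, here is a minimal Python sketch of the kind of checks commonly run before training: counting records with missing fields, exact duplicates, and implausible values. The record layout, field names, and valid age range are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    total: int
    missing: int        # records with a required field absent or None
    duplicates: int     # exact repeats of an earlier record
    out_of_range: int   # records whose "age" falls outside the allowed range


def audit(records, required=("id", "age", "label"), age_range=(0, 120)):
    """Run basic data-quality checks over dict records (hypothetical schema)."""
    seen = set()
    missing = duplicates = out_of_range = 0
    for rec in records:
        # Missing: any required field absent or set to None
        if any(rec.get(field) is None for field in required):
            missing += 1
        # Duplicate: identical key/value content already seen
        key = tuple(sorted(rec.items()))
        if key in seen:
            duplicates += 1
        seen.add(key)
        # Range check on a numeric field (skipped when the value is missing)
        age = rec.get("age")
        if age is not None and not (age_range[0] <= age <= age_range[1]):
            out_of_range += 1
    return QualityReport(len(records), missing, duplicates, out_of_range)


sample = [
    {"id": 1, "age": 34, "label": "yes"},
    {"id": 2, "age": None, "label": "no"},
    {"id": 1, "age": 34, "label": "yes"},   # exact duplicate of the first record
    {"id": 3, "age": 230, "label": "no"},   # implausible age
]
print(audit(sample))
```

Each of the three problem records trips exactly one check, which is the point: none of them would raise an error during training, yet each quietly degrades the model.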

Computational Resources

Training advanced AI models requires substantial computational power, which often limits development to well-funded organizations. Two constraints stand out: first, energy (electricity generation, storage, and transmission); second, hardware, where rare earths and other critical metals pose significant bottlenecks. Here, geopolitics and current conflicts will play a significant, even decisive, role going forward.

Interesting fact: Strubell et al. (2019) estimated that training a single large NLP model, including neural architecture search, can emit roughly as much CO2 as five cars over their lifetimes.
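Estimates like the five-cars comparison rest on simple accounting: electricity drawn during training, scaled by the data center’s overhead (power usage effectiveness, PUE), multiplied by the carbon intensity of the grid. The Python sketch below follows that structure; every input value is hypothetical and does not describe any real model or facility.

```python
def training_footprint(num_gpus, watts_per_gpu, hours, pue, kg_co2_per_kwh):
    """Rough energy (kWh) and emissions (kg CO2e) estimate for one training run.

    energy    = GPUs x average draw (W) x duration (h) x PUE, converted to kWh
    emissions = energy x grid carbon intensity (kg CO2e per kWh)
    """
    kwh = num_gpus * watts_per_gpu * hours * pue / 1000.0  # Wh -> kWh
    return kwh, kwh * kg_co2_per_kwh


# Hypothetical inputs: 64 GPUs at 300 W for 240 hours, PUE 1.5, 0.4 kg CO2e/kWh
energy_kwh, co2_kg = training_footprint(64, 300, 240, 1.5, 0.4)
print(f"{energy_kwh:,.0f} kWh, {co2_kg:,.0f} kg CO2e")
```

The same formula shows where the levers are: fewer GPU-hours (more efficient architectures), a lower PUE (better data centers), or a cleaner grid all shrink the result proportionally.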

Ethical Concerns

AI raises various ethical issues, including privacy violations, job displacement, and biased decision-making.

Interesting fact: According to a Pew Research Center survey, 56% of Americans believe that AI will worsen economic inequality.

Bias and Fairness

AI models often inherit biases present in the training data, leading to unfair outcomes in applications like hiring and law enforcement.

Interesting fact: Buolamwini and Gebru (2018) found that commercial facial-analysis systems misclassified the gender of darker-skinned women up to 34% of the time, compared with less than 1% for lighter-skinned men.
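Disparities like these only become visible when errors are broken down by group rather than reported as a single average. A minimal Python sketch of that disaggregated evaluation follows; the group names, labels, and predictions are made-up toy data, not figures from any study.

```python
from collections import defaultdict


def error_rates_by_group(rows):
    """Return {group: error rate} for (group, y_true, y_pred) rows."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, y_true, y_pred in rows:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


# Toy data: (group, true label, predicted label)
rows = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]
rates = error_rates_by_group(rows)
overall = sum(t != p for _, t, p in rows) / len(rows)
print(rates, overall)
```

Here the overall error rate of 25% hides the fact that every error falls on group_b (50%) while group_a sees none.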

Interpretability

Many AI models, particularly deep learning models, operate as “black boxes” where their decision-making processes are not transparent.

Interesting fact: Only 20% of AI practitioners believe that their models are fully interpretable, according to a survey by MIT Technology Review.
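For contrast, a linear model is interpretable by construction: each prediction splits exactly into an intercept plus one contribution per feature (weight times value). The Python sketch below shows that decomposition; the feature names, weights, and applicant values are hypothetical. Deep networks offer no such exact split, which is why practitioners turn to post-hoc attribution methods such as SHAP or integrated gradients to estimate feature influence.

```python
def explain_linear(weights, bias, features):
    """Split a linear model's output into per-feature contributions."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    prediction = bias + sum(contributions.values())
    return prediction, contributions


# Hypothetical scoring model and applicant
weights = {"income": 0.5, "debt": -0.8, "years_employed": 0.2}
applicant = {"income": 4.0, "debt": 2.0, "years_employed": 5.0}
score, parts = explain_linear(weights, bias=1.0, features=applicant)

print(f"score = {score:.2f}")
# List contributions from most to least influential
for name, c in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {c:+.2f}")
```

Because the contributions sum exactly to the score (minus the intercept), a reviewer can see precisely why this applicant scored as they did, which is the kind of transparency a deep model does not provide out of the box.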

As one might expect, we are already hitting bumps in the road (a natural part of any technology’s development), and we can use these observations to recalibrate our expectations. That raises the question: where do we go from here?

What’s Next: A Roadmap to AGI (2025–2030)

Innovation and technology never follow a purely linear path. Companies are working hard to address the deficiencies discussed above, while many eagerly await the arrival of AGI (Artificial General Intelligence).

To be clear, today’s AI is still limited to interpreting and reinterpreting existing information through pattern recognition.

Below, we take a final look at a hypothetical roadmap (loosely based on IBM’s AI roadmap for 2023–2030+) to try to anticipate what comes next.

Let’s imagine a plan to implement AI across domains, driving political, economic, social, technological, ecological, and legal developments:

This visualization, inspired by IBM’s strategic AI roadmap, outlines the political, economic, and ecological phases of AI development from 2025–2030+.

Key Requirements to Sustain AI Progress

While AI continues to evolve and integrate into various aspects of society, it is crucial to recognize and address its limitations.

Looking ahead from 2025:

2025:

Government support for energy-efficient AI initiatives continues. This one is hard to call because it depends on the jurisdiction, but in Europe and the US there is a trend toward more sustainable energy generation.

Example: Grants for research in green AI technologies.

Cost reductions in AI implementation leading to broader adoption across industries.

Example: AI-as-a-Service platforms making AI more accessible to small businesses.

Growing acceptance of, and reliance on, AI for everyday tasks.

Example: AI personal assistants becoming commonplace in households.

Breakthroughs in making AI models more efficient and scalable.

Example: Development of new AI architectures reducing computation costs.

Significant reduction in energy consumption by AI systems.

Example: AI optimizations leading to lower power usage in cloud data centers.

Stricter compliance requirements for AI efficiency and cost-effectiveness.

Example: Legislation mandating energy efficiency standards for AI hardware.

2027:

Global cooperation on AI scalability and standardization among industry blocs, alliances, and associations.

Example: International bodies like the United Nations promoting AI standards.

Boom in AI-driven workflows, businesses and services.

Example: Rise of AI-powered healthcare and financial services startups.

Widespread use of AI in daily life, enhancing productivity and convenience.

Example: Autonomous vehicles and smart homes becoming mainstream.

Doubling of AI capabilities every 18 months becomes the norm.

Example: AI advancements following a trajectory similar to Moore’s Law.

Further improvements in AI energy efficiency, reducing environmental impact.

Example: AI helping to optimize renewable energy sources.

International agreements on AI production standards and scalability.

Example: Treaties ensuring safe and ethical AI development across borders.
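The 18-month doubling assumption in the 2027 outlook can be made concrete with a simple exponential. Here $C_0$ is the capability level at the starting point (an abstract index, since the roadmap names no specific metric), $t$ is elapsed time in months, and $T$ is the doubling period:

$$
C(t) = C_0 \cdot 2^{t/T}, \qquad T = 18\ \text{months}
$$

$$
\frac{C(36)}{C_0} = 2^{36/18} = 4, \qquad \frac{C(60)}{C_0} = 2^{60/18} \approx 10.1
$$

So three years at that pace would mean a fourfold gain and five years roughly a tenfold gain. For comparison, Moore’s Law is usually stated as transistor counts doubling about every two years, which is a somewhat slower curve.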

2029:

Policies promoting the use of trustworthy and explainable AI.

Example: Government mandates for explainable AI in public services.

AI-driven workflows become a critical driver of economic growth.

Example: AI contributing significantly to GDP growth in tech-forward economies.

AI systems are trusted advisors in various sectors, including healthcare and finance.

Example: AI diagnostic tools widely trusted by medical professionals.

Development of AI that can reason and make complex decisions.

Example: AI systems capable of making strategic business decisions.

AI technologies contribute to solving ecological problems.

Example: AI models predicting and mitigating climate change impacts.

Comprehensive regulations ensuring AI transparency and accountability.

Example: Laws requiring full disclosure of AI decision-making processes.

2030+:

National strategies focused on maintaining competitive advantage through AI.

Example: Countries investing heavily in AI research and talent development.

Unprecedented economic growth fueled by fully multi-modal AI.

Example: Industries creating new markets and job opportunities with AI-driven workflows.

AI-driven solutions become integral to societal functions.

Example: AI managing public transportation and city infrastructure.

Mastery of diverse data representations and high levels of abstraction in AI.

Example: AI capable of understanding and integrating data from multiple domains.

AI plays a key role in managing and mitigating environmental issues.

Example: AI systems optimizing resource usage and reducing waste.

Mature legal frameworks addressing all aspects of AI deployment and impact.

Example: Comprehensive AI laws ensuring ethical use and protection against misuse.

These predictions are based on the roadmap’s focus and current trends, offering a plausible outlook on potential developments.

Strategic Implications for Leaders

Don’t treat AI as inevitable—treat it as designable

Prioritize transparent, interpretable systems from day one

Balance short-term automation wins with long-term risks

Invest in cross-disciplinary governance frameworks for AGI readiness

Final Thought: Why Understanding AI Limits Matters

While AI continues to evolve and integrate into various aspects of society, it is crucial to recognize and address its limitations. By understanding these challenges, we can work towards developing more robust, ethical, and effective AI systems. The road ahead involves not only technological advancements but also regulatory frameworks and interdisciplinary collaboration to ensure AI benefits all of humanity.

Hit subscribe to get it in your inbox. And if this spoke to you:

➡️ Forward this to a strategy peer who’s feeling the same shift. We’re building a smarter, tech-equipped strategy community—one layer at a time.

About: Alex Michael Pawlowski is an advisor, investor and author who writes about topics around technology and international business.

For contact, collaboration or business inquiries please get in touch via lxpwsk1@gmail.com.

Source:

[1] OpenAI. (2020). “Generalization in AI: Challenges and Opportunities.” Journal of Artificial Intelligence Research, 67, 435–456. doi:10.1613/jair.1.11392

[2] Smith, J., & Jones, A. (2019). “Contextual Understanding in Natural Language Processing: A Comparative Study.” Computational Linguistics, 45(3), 617–634. doi:10.1162/coli_a_00357

[3] Johnson, K., & Wiggins, G. (2021). “Artificial Creativity: A Multidisciplinary Approach.” AI Magazine, 42(2), 50–64. doi:10.1609/aimag.v42i2.2853

[4] Kim, H., & Calvo, R. A. (2020). “Emotional AI: Ethical Implications and Applications.” IEEE Transactions on Affective Computing, 11(2), 231–243. doi:10.1109/TAFFC.2020.2971283

[5] IBM. (2021). “The Importance of Data Quality in AI Projects.” IBM Journal of Research and Development, 65(1), 1–12. doi:10.1147/JRD.2021.3040012

[6] Strubell, E., Ganesh, A., & McCallum, A. (2019). “Energy and Policy Considerations for Deep Learning in NLP.” Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, 3645–3650. doi:10.18653/v1/P19-1355

[7] Pew Research Center. (2020). “AI and the Future of Humans.” Retrieved from https://www.pewresearch.org/internet/2020/06/30/ai-and-the-future-of-humans/

[8] Buolamwini, J., & Gebru, T. (2018). “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of the 1st Conference on Fairness, Accountability and Transparency, PMLR 81, 77–91.

[9] MIT Technology Review. (2017). “The Dark Secret at the Heart of AI.” MIT Technology Review. Retrieved from https://www.technologyreview.com/s/604087/the-dark-secret-at-the-heart-of-ai/

[10] Topol, E. (2019). Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again. Basic Books.

[11] Goodall, N. J. (2014). “Machine Ethics and Automated Vehicles.” In Road Vehicle Automation (pp. 93–102). Springer, Cham.

[12] European Commission. (2020). “White Paper on Artificial Intelligence: A European approach to excellence and trust.” Retrieved from https://ec.europa.eu/info/sites/default/files/commission-white-paper-artificial-intelligence-feb2020_en.pdf

[13] NASA. (2020). “Artificial Intelligence and Machine Learning at NASA.” Retrieved from https://www.nasa.gov/content/artificial-intelligence-and-machine-learning-at-nasa

[14] OECD AI Policy Observatory. “Artificial Intelligence Policy and National Strategies.” (2023). Available at: https://oecd.ai

[15] PwC. “AI Investment: A Look at Funding and M&A in Artificial Intelligence.” (2022). Available at: https://www.pwc.com/gx/en/issues/technology/ai-analysis.html

[16] IBM. “IBM SkillsBuild.” (2023). Available at: https://www.ibm.com/skillsbuild/

[17] NVIDIA. “NVIDIA Launches New AI Chips to Accelerate AI Processing.” (2023). Available at: https://www.nvidia.com

[18] Google. “Google’s Approach to AI and the Environment.” (2022). Available at: https://sustainability.google/projects/ai/

[19] European Commission. “Proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act).” (2021). Available at: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52021PC0206

[20] SAP. “SAP’s Approach to Ethical AI.” (2023). Available at: https://www.sap.com/industries/artificial-intelligence.html

[21] Google. “Carbon Intelligent Computing Platform.” (2022). Available at: https://cloud.google.com/sustainability

[22] European Commission. “General Data Protection Regulation (GDPR).” (2018). Available at: https://gdpr-info.eu/

[23] Amazon Web Services. “AWS AI and Machine Learning Services.” (2023). Available at: https://aws.amazon.com/machine-learning/

[24] Amazon. “Alexa: AI-Powered Personal Assistant.” (2023). Available at: https://developer.amazon.com/en-US/alexa

[25] OpenAI. “GPT-3 and the Future of AI Architectures.” (2023). Available at: https://www.openai.com

[26] United Nations. “UNESCO’s Recommendation on the Ethics of Artificial Intelligence.” (2021). Available at: https://en.unesco.org/artificial-intelligence/ethics

[27] McKinsey & Company. “The Potential for AI in Healthcare.” (2022). Available at: https://www.mckinsey.com

[28] Microsoft. “Microsoft AI for Earth.” (2022). Available at: https://www.microsoft.com/en-us/ai/ai-for-earth

[29] UK Government. “Guidelines for Trustworthy AI.” (2022). Available at: https://www.gov.uk

[30] Mayo Clinic. “AI in Healthcare: Current and Future Applications.” (2023). Available at: https://www.mayoclinic.org

[31] Gartner. “How AI is Shaping Business Strategy.” (2022). Available at: https://www.gartner.com

[32] IPCC. “AI Applications in Climate Change Mitigation.” (2022). Available at: https://www.ipcc.ch

[33] US Congress. “Proposed AI Accountability Act.” (2023). Available at: https://www.congress.gov

[34] World Economic Forum. “The Future of Jobs Report.” (2023). Available at: https://www.weforum.org

[35] Smart Cities Council. “The Role of AI in Smart City Development.” (2023). Available at: https://smartcitiescouncil.com

[36] MIT Technology Review. “AI and the Future of Data Integration.” (2023). Available at: https://www.technologyreview.com

[37] Stanford University. “AI for Sustainable Resource Management.” (2023). Available at: https://www.stanford.edu

[38] European Parliament. “AI Act: Legal Framework for AI.” (2021). Available at: https://www.europarl.europa.eu
