Managing the New Workforce: Humans + Agents

#111: Leading in the Age of AI Agents: A Leadership Roadmap

Imagine this. It’s a couple of years from now. You apply for a new role. Your CV has been created and distributed by your personal career assistant agent. The first “recruiter” who reads it is also an AI, scanning specifically for your ability to lead hybrid teams of humans and AI agents.

How does that make you feel? Uneasy? Skeptical? Excited?

It doesn’t even sound like science fiction anymore. The path toward this reality is already visible. Organizations are moving from experimenting with AI assistants to embedding agents that perform entire workflows—and sometimes entire job functions.

This raises a deeper question: What does it mean to lead when your team includes not just people, but agents?

Where We Are Today and Where We’re Headed

Right now, the rhetoric of “AI colleagues” or “AI employees” is bubbling up in boardrooms. But in practice, today’s agents remain constrained.

They are designed to perform specific workflows under human oversight.

Oracle’s newly launched HR agents, for example, can schedule, process requests, or help with performance management—but they are not yet strategic HR partners.

Salesforce uses AI agents to call thousands of leads that its human workforce alone could not process, reporting that 50% of conversations are now handled by AI.

Microsoft forecasts a clear progression: agents as assistants → team members → process-owners capable of running full workflows with human oversight.

In this future, most professionals will become “agent bosses,” managing both humans and AI systems, setting boundaries, and intervening when needed.

The business case is strong: many organizations expect ROI from AI agents within just one year of adoption (Workday 2025).

The human case is equally pressing: while agents can relieve teams of repetitive, manual tasks, anxiety around job security and meaning is rising.

For example, Salesforce CEO Marc Benioff disclosed that AI agents replaced about 4,000 customer-support roles this year (Business Insider). This scale of change forces us to ask:

Whose jobs are safe?

How do careers evolve in this new landscape?

And for managers: Are we ready to lead through this?

A New Leadership Challenge

To lead in this future, managers will need to stretch their skills and mindsets. It’s not just about adopting new tools—it’s about navigating complexity, paradox, and human emotion.

Here’s a roadmap for the three stages of integrating AI agents into organizations:

I. Early Design

At this stage, managers focus on laying the groundwork for collaboration between humans and AI agents.

Architecting workflows

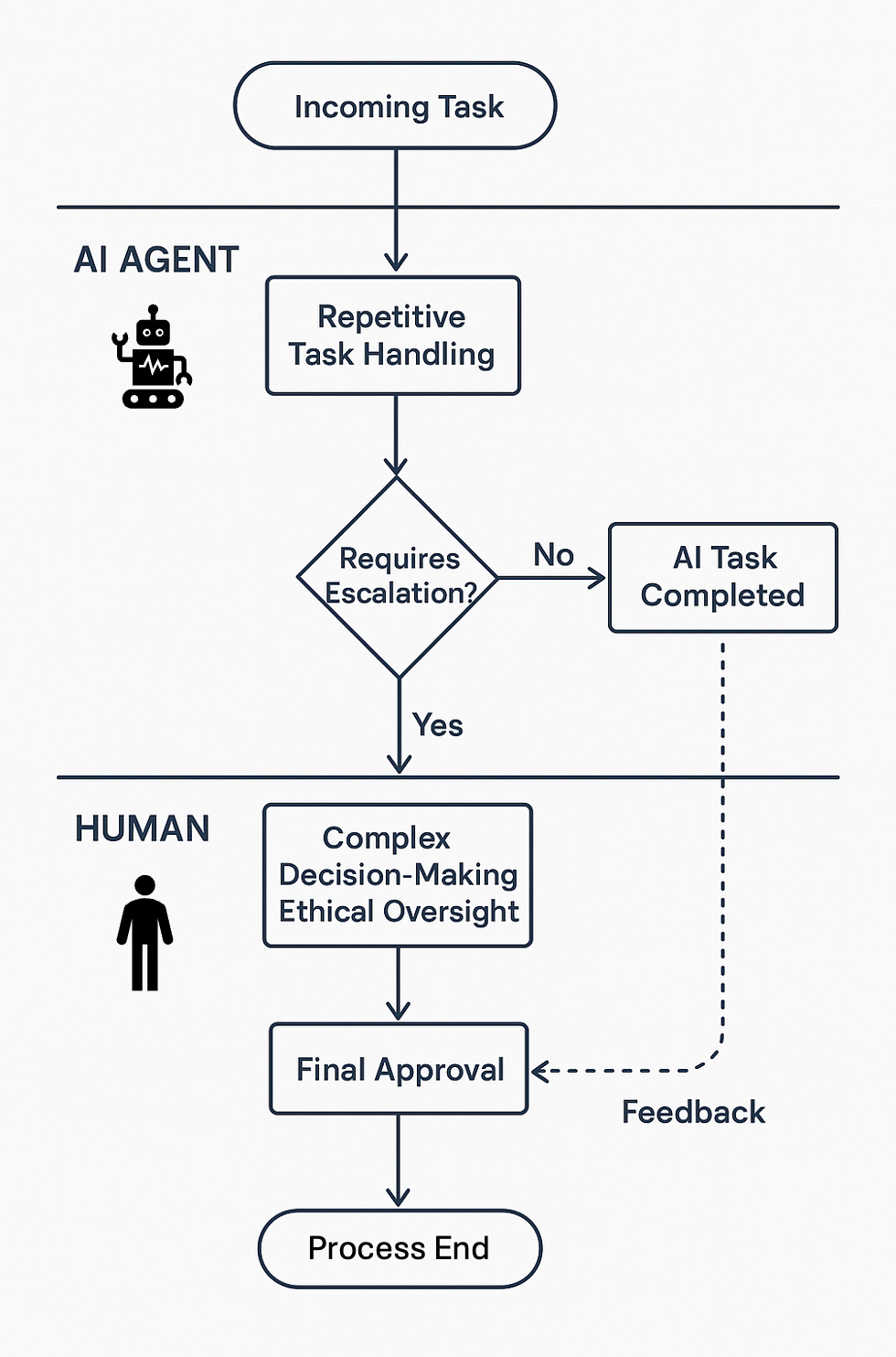

Managers will design how work flows between humans and agents, ensuring clear boundaries and decision-making authority.

Best practices:

Map workflows clearly, defining explicit hand-offs between humans and agents.

Start with pilot programs to test processes before scaling up.

Establish escalation paths for when AI systems reach their limits.

Involve diverse teams in workflow design to avoid blind spots.
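To make the idea of explicit hand-offs and escalation paths concrete, here is a minimal sketch of a routing rule. Everything in it is an illustrative assumption rather than a reference to any vendor's product: the task kinds, the `AGENT_ALLOWED` set, and the `MIN_CONFIDENCE` threshold are hypothetical placeholders that a real team would define during workflow mapping.

```python
from dataclasses import dataclass
from enum import Enum


class Owner(Enum):
    AGENT = "agent"
    HUMAN = "human"


@dataclass(frozen=True)
class Task:
    kind: str          # e.g. "schedule_interview" (hypothetical task name)
    confidence: float  # agent's self-reported confidence, from 0.0 to 1.0


# Hypothetical boundary: the only task kinds the agent may complete alone.
AGENT_ALLOWED = {"schedule_interview", "answer_policy_question"}

# Hypothetical threshold: below this, the agent has "reached its limits".
MIN_CONFIDENCE = 0.8


def route(task: Task) -> Owner:
    """Decide who owns a task: the agent, or a human via escalation."""
    if task.kind not in AGENT_ALLOWED:
        return Owner.HUMAN  # outside the agent's mapped boundary
    if task.confidence < MIN_CONFIDENCE:
        return Owner.HUMAN  # escalation path for uncertain cases
    return Owner.AGENT
```

The point of the sketch is that the boundary lives in one visible place. A pilot program can start with a very small allowed set and widen it only as the hand-offs prove reliable.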

Redefining metrics

Traditional KPIs won’t be enough. Leaders must rethink how to measure both agent and human performance and recognize shared outcomes.

Best practices:

Add collaboration KPIs, such as the effectiveness of human–agent interactions.

Track both agent accuracy/speed and human satisfaction and growth.

Continuously refine metrics as workflows evolve.

Ensure performance reviews fairly reflect human contributions in hybrid teams.
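One way to picture a hybrid scorecard is to compute agent and human measures side by side from the same interaction records. The sketch below is only an illustration: the `Interaction` fields, the 1-to-5 satisfaction scale, and the metric names are assumptions, not an established standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    agent_correct: bool           # did the agent's output pass human review?
    escalated: bool               # was the task handed off to a human?
    resolved_after_handoff: bool  # if escalated, did the human resolve it?
    human_satisfaction: int       # hypothetical 1-5 survey score


def scorecard(interactions: list[Interaction]) -> dict:
    """Summarize agent, collaboration, and human measures together."""
    total = len(interactions)
    escalated = [i for i in interactions if i.escalated]
    handoff_success = (
        sum(i.resolved_after_handoff for i in escalated) / len(escalated)
        if escalated
        else None  # no hand-offs happened, so the rate is undefined
    )
    return {
        "agent_accuracy": sum(i.agent_correct for i in interactions) / total,
        "escalation_rate": len(escalated) / total,
        "handoff_success_rate": handoff_success,
        "avg_human_satisfaction": sum(i.human_satisfaction for i in interactions) / total,
    }
```

Keeping the collaboration measure (hand-off success) next to agent accuracy and human satisfaction makes it harder to optimize one at the expense of the others.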

Emotional intelligence

Agents may not feel—but people do. Leaders must address anxieties about jobs, careers, and meaning.

Best practices:

Communicate openly about potential job impacts and career paths.

Provide reskilling opportunities to support workforce transitions.

Create regular forums for feedback and concerns.

Acknowledge and support the emotional toll of organizational change.

II. Adoption

Once agents are embedded, the focus shifts to oversight, learning, and trust-building.

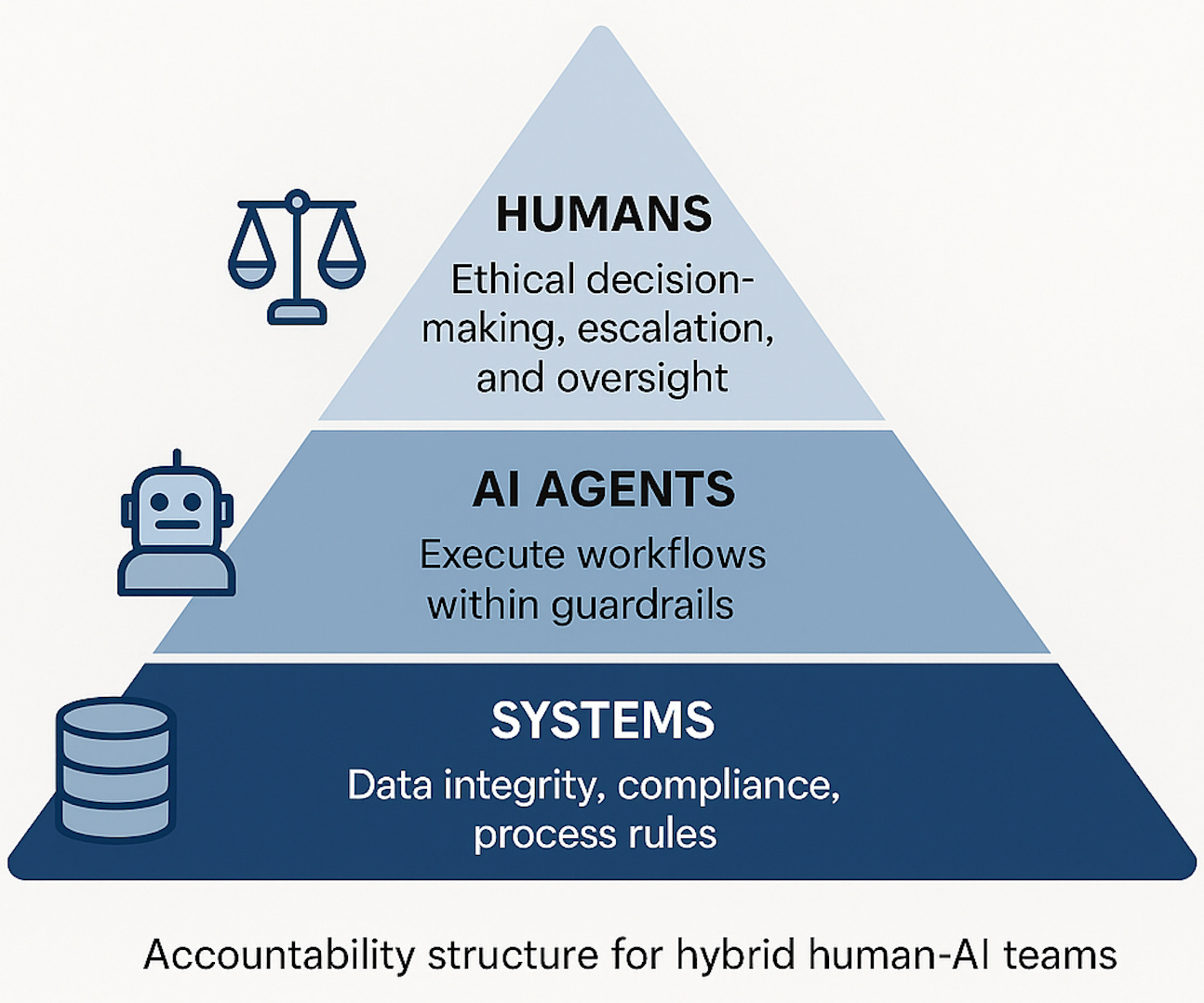

Oversight and accountability

Leaders must define guardrails: what agents can decide, what needs escalation, and how mistakes are handled.

Best practices:

Create clear responsibility matrices (RACI) for humans and agents.

Audit agent decisions regularly for compliance and fairness.

Design override protocols for human intervention when necessary.

Document decisions to establish accountability and transparency.
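The guardrails, override protocol, and decision log described above can be sketched together in a few lines. This is a simplified illustration under stated assumptions: the `GUARDRAILS` table, the action names, and the role names are hypothetical, and a real responsibility matrix would carry more detail than a single accountable role.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionLog:
    """Append-only record of who decided what, and why."""
    entries: list = field(default_factory=list)

    def record(self, action: str, decided_by: str, outcome: str, reason: str) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "decided_by": decided_by,
            "outcome": outcome,
            "reason": reason,
        })


# Hypothetical guardrail table:
# action -> (may the agent decide alone?, accountable human role)
GUARDRAILS = {
    "approve_leave_request": (True, "hr_operations_lead"),
    "change_salary": (False, "compensation_manager"),
}


def decide(action, agent_proposal, log, human_override=None):
    """Apply guardrails to an agent proposal and document the result."""
    # Unknown actions default to "agent may not decide".
    agent_may_decide, accountable = GUARDRAILS.get(action, (False, "unassigned"))
    if human_override is not None:
        # Override protocol: a human decision always wins.
        log.record(action, accountable, human_override, "human override")
        return human_override
    if agent_may_decide:
        log.record(action, "agent", agent_proposal, "within guardrails")
        return agent_proposal
    log.record(action, accountable, "escalated", "outside agent authority")
    return "escalated"
```

Because every path writes to the log, a later audit can see not only what was decided but whether an agent or a named human role was accountable for it.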

Judgment under uncertainty

Errors and ethical dilemmas will arise. Leaders need principled judgment to navigate ambiguity.

Best practices:

Establish cross-functional ethics committees to review complex cases.

Develop internal case studies to train teams on real-world dilemmas.

Train employees to recognize and mitigate AI bias or errors.

Encourage slow thinking for high-stakes decisions rather than rushing to action.

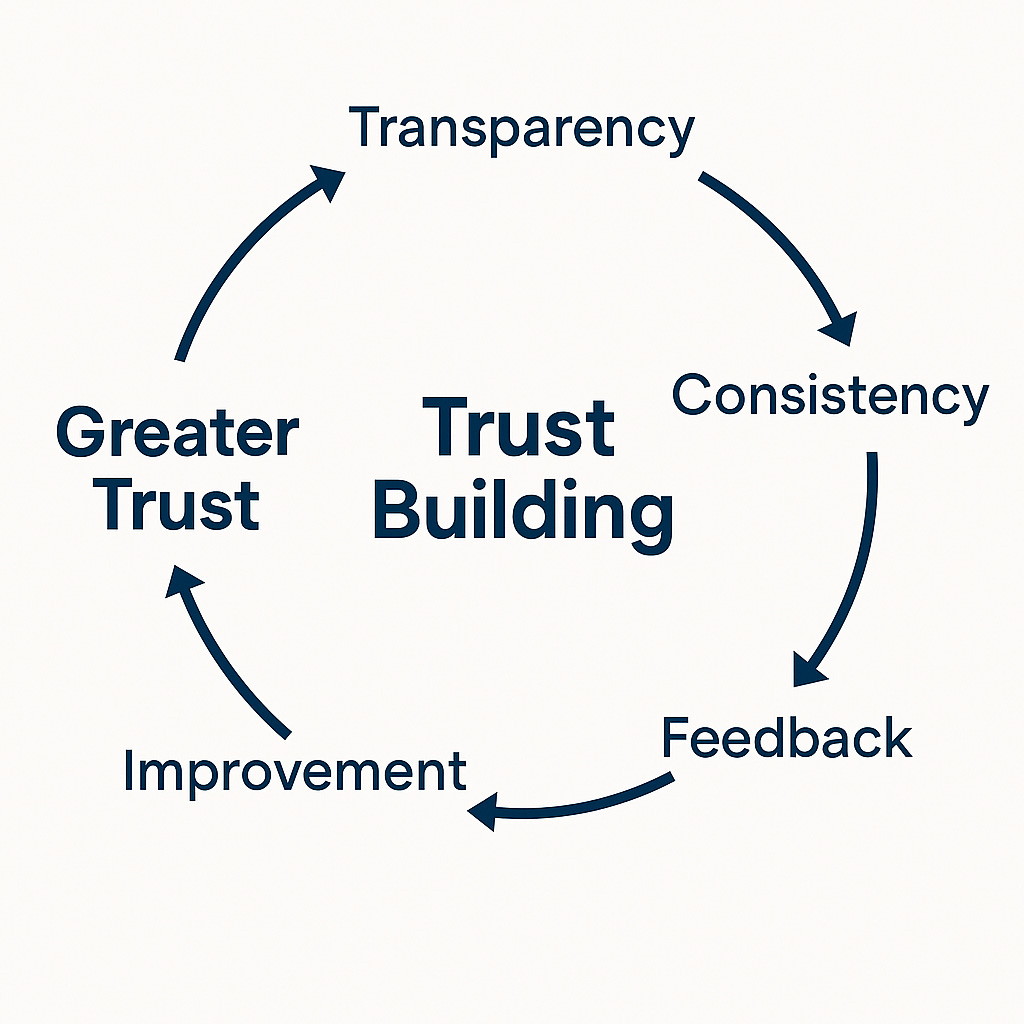

Trust building

Trust must extend to teams, systems, and leadership. Without it, adoption will stall.

Best practices:

Be transparent about how agent decisions are made and reviewed.

Share both successes and failures openly to create psychological safety.

Provide clear career paths for employees working in hybrid teams.

Ensure customers and external stakeholders understand how decisions are made.

III. Scaling

As organizations mature, the challenge shifts to creating breakthrough value and sustaining human engagement.

Thinking bigger

Beyond automation, leaders must focus on innovation and creativity.

Best practices:

Hold cross-team brainstorming sessions to identify transformative opportunities.

Allocate resources for innovation labs and experiments.

Reward calculated risk-taking that leads to strategic breakthroughs.

Use agents to free up humans for visionary, strategic work.

Meaning making

As agents take on repetitive tasks, leaders must actively safeguard meaning at work.

Best practices:

Regularly connect daily tasks to the company’s broader mission.

Build hybrid team rituals that celebrate human creativity.

Rotate employees into roles that emphasize strategy and innovation.

Create systems for recognizing non-quantifiable contributions like mentorship or innovation.

Developing human potential

The value of human work shifts toward creativity, relationships, and judgment.

Best practices:

Focus training on human-exclusive skills like empathy and complex problem-solving.

Build leadership pipelines for managing human–agent hybrid teams.

Provide continuous development to keep human skills relevant.

Recognize and reward relational and visionary contributions.

The Leadership Demands Ahead

The leaders who will thrive in this future are:

Resilient and adaptive, comfortable holding paradoxes: efficiency and ethics, human needs and technological demands.

Comfortable with dilemmas, able to act with imperfect information.

Fluent in dual contexts, seamlessly shifting between human relationships and technological oversight.

This transformation requires integration, not just optimization: blending left-brain logic and systems thinking with right-brain empathy and meaning-making.

Final Thoughts

As humans, we are wired to anthropomorphize. We call agents “colleagues” or even “employees.” But for clarity, we must resist that temptation: agents are not people.

People remain at the center of leadership because leadership is not just about efficiency—it’s about vision, empowerment, and inspiration.

As agents evolve, our task as leaders is simple but profound:

Manage systems and processes.

Lead people.

📚 References

Oracle. AI Agents Help HR Leaders Boost Workforce Productivity and Enhance Performance Management.

Agentic AI at Scale. Redefining Management for a Superhuman Workforce.

Business Insider Africa. Marc Benioff Says Salesforce Has Cut 4,000 Roles in Support Because of AI Agents.

The Guardian. Microsoft Says Everyone Will Be a Boss in the Future – of AI Employees.

World Economic Forum. Managing AI Agents: These Are the Core Skills We’ll Need.

A. Pawlowski | The Strategy Stack

I love this. This is very helpful and right on time, with great visuals too. I am working to create my own Agent/Co-pilot. Is there software that you recommend for this?

I appreciated the logic threading through your piece. It reminded me of how chess players train with machines: AI engines, coaching platforms, game databases, smart boards...all designed to simulate complexity until the player is ready to face a human opponent. That system works because the rules are fixed, the feedback is immediate, and the goal is precision.

But talent management isn’t chess. It’s not rule-bound; it’s relational, behavioral, and riddled with contradiction. I remain unconvinced that AI, no matter how refined the workflows, can resolve the behavioral shortcomings that plague HR. Kahneman’s Thinking, Fast and Slow came to mind as I read, because we’ve known for decades that HR decisions are shaped more by bias and cognitive drift than by process. And I’m not sure we’ll ever have the data architecture needed to redesign that.

Tech companies sell a rosy future, but I continue to see end-users misfire. AI is used to draft job descriptions that repel the very candidates they claim to attract. Conditionals and qualifiers are set by humans still operating inside bias systems, so the results remain structurally unsound. You’ve listed valuable best practices, and I agree they’re needed. But I never underestimate the depths of human ineffectiveness...especially when the system demands less cognition, less critical thinking, and less accountability.

AI doesn’t fix that. It amplifies it. Unless we redesign the architecture.

I look forward to learning more from the two of you.