The Reverse Turing Test: Can Humans Still Surprise AI?

#29: If algorithms predict our preferences before we do, can human creativity still catch AI off guard?

Picture this: You open Spotify and it queues up a playlist that mirrors your mood perfectly. You scroll through Instagram and see ads for things you were just thinking about. It feels like magic — or maybe a touch of mind-reading. But what if it’s just machine learning doing what it does best: predicting you?

This eerie accuracy raises an important and deeply human question: In a world where AI can anticipate our every click, move, or desire, what does authentic originality even look like anymore? And more importantly, can we still surprise the machines?

The Great Prediction Machine

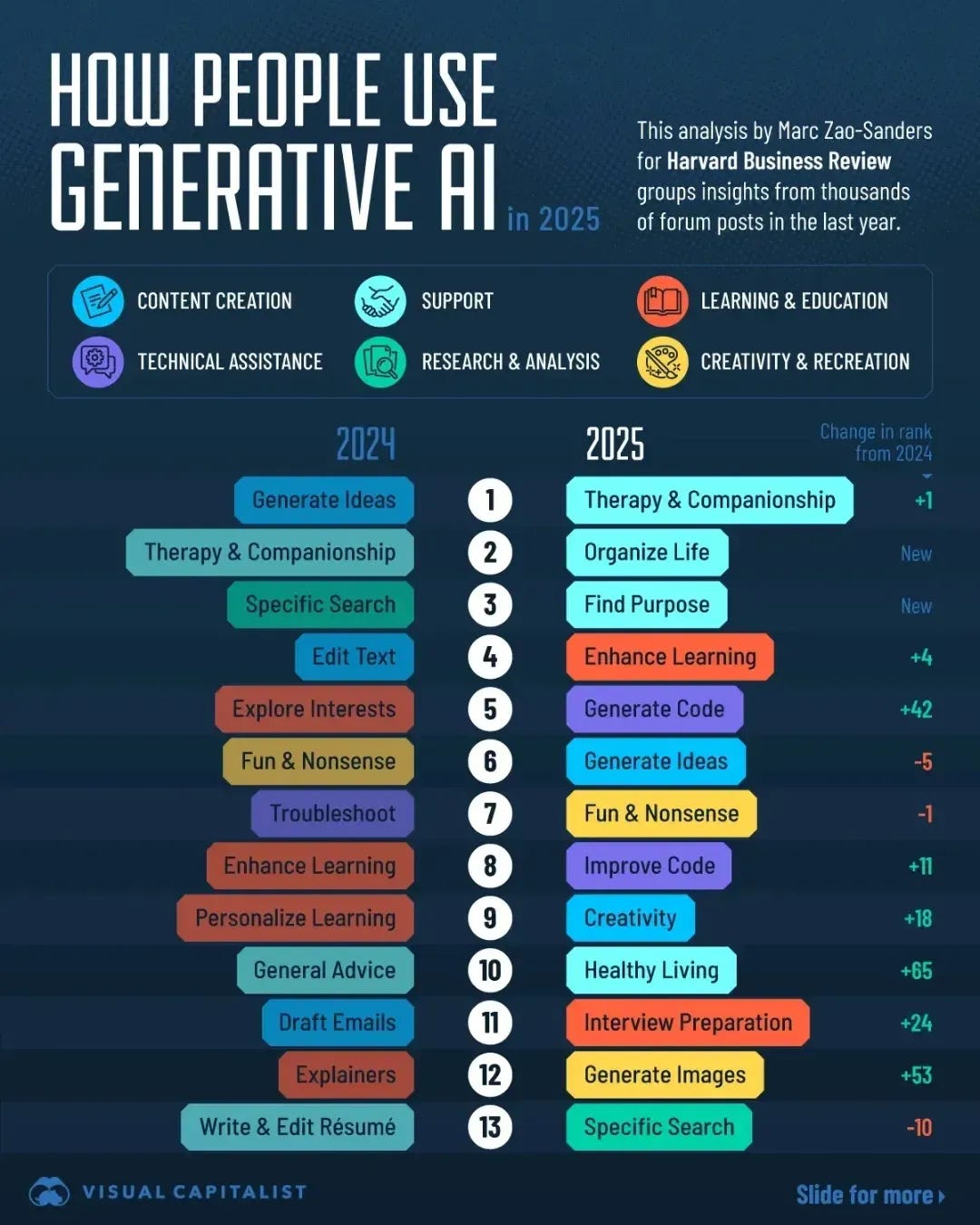

Artificial intelligence today is more than capable of anticipating our behaviors. Some models reportedly predict human responses with up to 85% accuracy. In customer behavior forecasting, for example, machine learning algorithms help businesses tailor offers so precisely that conversions spike simply because the customer feels seen.

It’s useful. It’s also unsettling.

Researchers at Cambridge have warned about how this level of prediction could be used to manipulate public decisions. Consider your Netflix queue — are you choosing what to watch, or is the algorithm nudging you?

The paradox of personalization is that it often narrows, rather than expands, our sense of agency. When our future actions become predictable, we risk becoming characters in someone else’s script.

Creativity: The Final Frontier?

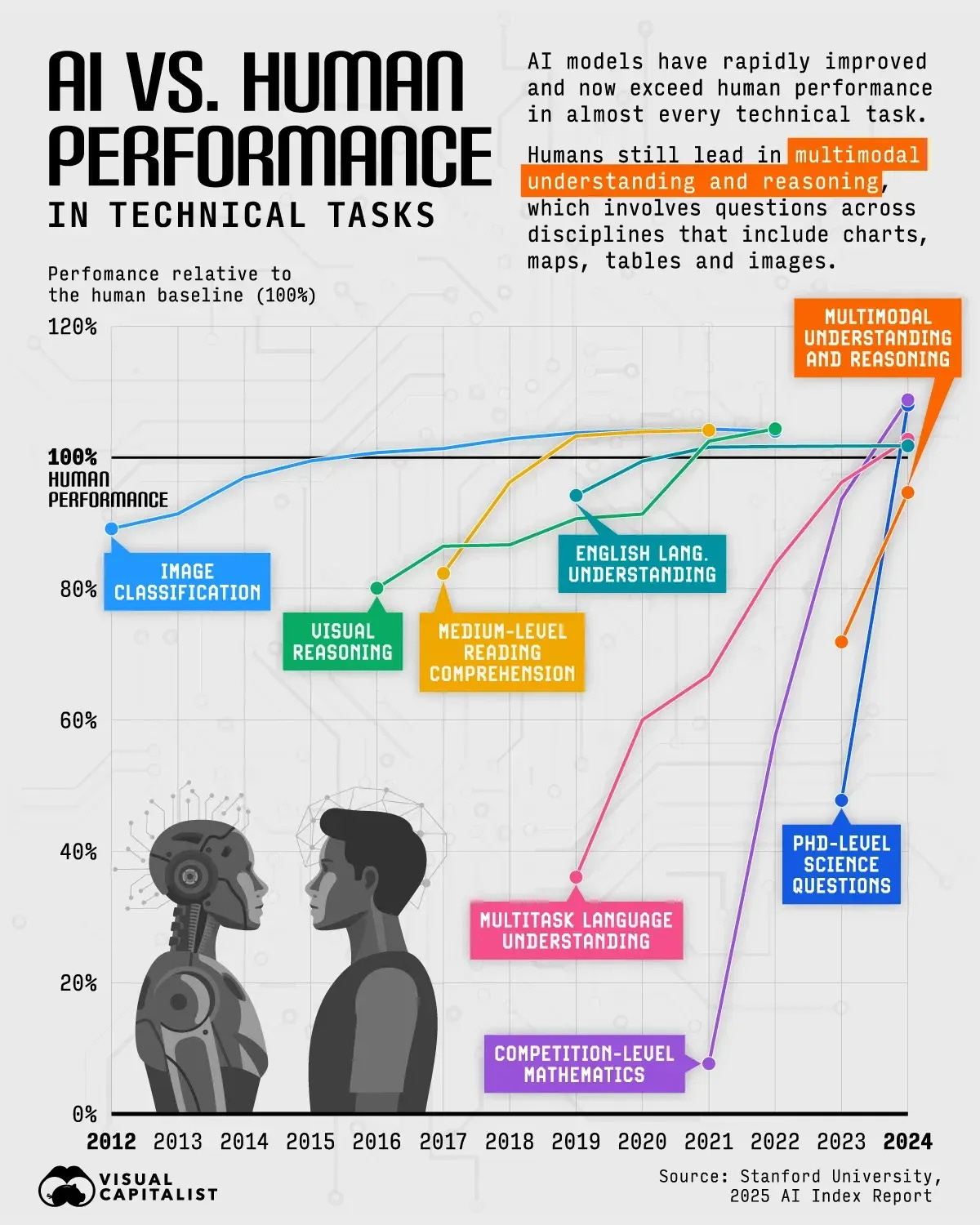

We often talk about creativity as the last bastion of humanity — our crown jewel in the AI age. But even here, the machines are creeping in.

A 2023 study published in Scientific Reports, a Nature Portfolio journal, found that AI models outperformed the average human in creative tasks, like brainstorming alternative uses for a brick. These models, trained on billions of text samples, combine ideas in novel ways faster than most humans ever could.

But here’s the twist: AI can be original, but it’s rarely surprising. Creativity, at its best, is not just novelty. It’s about meaning, relevance, and emotional resonance. AI can produce a hundred variations of a poem in a minute, but it cannot write your poem, rooted in your lived experience, heartbreak, joy, and quirks.

Think of the viral poem “For Fran” written by an AI chatbot and misattributed to a human author. It was decent, good even, but it lacked that ineffable spark that makes words breathe.

Betting Against the Algorithm

Now, let’s switch lanes to prediction markets — those platforms where people bet on future events like elections, economic trends, or celebrity breakups.

You’d expect AI to own this space, right? Actually, humans still win.

The Good Judgment Project, for instance, used crowdsourced human predictions to outshine intelligence analysts. Their “superforecasters” — trained not in machine learning but in probabilistic reasoning and open-mindedness — consistently beat the odds. Why? Because they incorporate ambiguity, irrationality, and emotional subtext — areas where AI is still catching up.

This is the power of human messiness: we’re illogical, moody, and often contradictory. And that makes us harder to predict — and more valuable in a prediction-saturated world.

Under the Hood: The Technical Challenge of Surprising AI

To understand whether humans can still surprise AI, we must first understand what “surprise” means to a machine. In technical terms, surprise is tied to probability: the less likely a model judged an outcome to be, the more surprising that outcome is. Entropy, the average of that surprise over all possible outcomes, measures how unpredictable a source of data is. AI models, particularly those rooted in probabilistic or neural architectures, are trained to reduce this uncertainty by assigning high probability to the most likely outcome given prior data.
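To make “surprise” concrete, here is a minimal Python sketch of the two quantities involved: the surprise of a single outcome (its negative log probability, in bits) and entropy (the average surprise). The `predicted` probabilities are invented purely for illustration and do not come from any real recommendation system.

```python
import math

def surprisal(p: float) -> float:
    """Surprise, in bits, of an outcome the model assigned probability p."""
    return -math.log2(p)

def entropy(dist: dict[str, float]) -> float:
    """Shannon entropy in bits: the probability-weighted average surprisal."""
    return sum(p * surprisal(p) for p in dist.values() if p > 0)

# Hypothetical model output: predicted probabilities for a listener's next genre.
predicted = {"pop": 0.5, "rock": 0.25, "jazz": 0.125, "polka": 0.125}

print(surprisal(predicted["pop"]))    # 1.0 bit: the expected choice barely surprises
print(surprisal(predicted["polka"]))  # 3.0 bits: the unlikely choice surprises more
print(entropy(predicted))             # 1.75 bits of uncertainty on average
```

In this framing, “surprising the machine” simply means doing something it had assigned a low probability.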

Modern AI systems like GPT, along with many recent recommendation engines, use transformer architectures, which analyze vast contexts to predict the next most probable token, click, or decision. The key strength of these models lies in pattern recognition: they thrive on repeated behavior, structured input, and dense datasets.
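The idea of predicting the next most probable item can be sketched with something far simpler than a transformer. The toy below is a bigram counter, swapped in only because it fits in a few lines; it is not how GPT or any production recommender works. The `history` of actions is made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(tokens):
    """Count how often each item follows each other item."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, prev):
    """Return (most likely next item, estimated probability), or None if unseen."""
    following = counts.get(prev)
    if not following:
        return None
    token, n = following.most_common(1)[0]
    return token, n / sum(following.values())

# Hypothetical log of one person's morning routine.
history = "coffee email coffee email coffee news coffee email".split()
model = train_bigrams(history)

print(predict_next(model, "coffee"))   # ('email', 0.75)
print(predict_next(model, "podcast"))  # None: no pattern to lean on
```

Repeated behavior makes the prediction confident, while an action the model has never seen leaves it with nothing to say. That is the small-scale version of why dense, repetitive data favors the machine.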

Here’s the catch: humans are pattern-breaking creatures. The very events that make us interesting, such as emotional pivots, creative risks, and moral dilemmas, are often outliers in training data. These are sparsely represented and difficult to model. In fact, some cognitive phenomena, like latent inhibition (tuning out familiar stimuli that have proven irrelevant, which leaves attention free for novel ones), are challenging to encode into any machine learning paradigm.

In areas like reinforcement learning, AI attempts to simulate trial-and-error decision-making. Yet it often lacks intrinsic motivation, a driver of human creativity and spontaneity. AI doesn’t “want” anything unless programmed to optimize for specific goals, and even then, it lacks the meta-cognition to reflect on its own limitations or desires.
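A rough sketch of what trial-and-error learning looks like in code is an epsilon-greedy agent, one of the simplest reinforcement learning strategies. The action names and payoffs below are hypothetical, and the rewards are fixed numbers to keep the example easy to follow; real environments are noisy.

```python
import random

def epsilon_greedy(rewards, steps=1000, epsilon=0.1, seed=0):
    """Learn which action pays best by trial and error.

    rewards: hypothetical fixed payoff per action, i.e. the goal the
    agent has been told to optimize. Returns the learned value estimates.
    """
    rng = random.Random(seed)
    actions = list(rewards)
    estimates = {a: 0.0 for a in actions}
    pulls = {a: 0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)              # explore: pick at random
        else:
            action = max(actions, key=estimates.get)  # exploit: best known action
        pulls[action] += 1
        # Incremental average of the rewards observed for this action.
        estimates[action] += (rewards[action] - estimates[action]) / pulls[action]
    return estimates

payoffs = {"safe": 0.2, "familiar": 0.5, "novel": 0.9}
learned = epsilon_greedy(payoffs)
print(max(learned, key=learned.get))
```

Note where the agent's “motivation” comes from: the `rewards` table is handed to it from outside, and its only “curiosity” is a random number falling below `epsilon`. Nothing in the loop asks whether the goal itself is worth pursuing.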

Furthermore, multi-agent prediction systems, such as those used in high-frequency trading or adversarial networks, demonstrate how AI can be outmaneuvered when humans introduce chaotic inputs: signals that defy historical correlation or exploit unseen system weaknesses. A loose analogy is Gödel’s incompleteness theorems: any consistent formal system expressive enough to describe basic arithmetic contains true statements that cannot be proved from the system’s own rules.

Ultimately, what separates human originality from algorithmic prediction isn’t just the output, but the intentionality behind it — a concept that still resists encoding.

The Psychology of Surprise

Here’s where psychology steps in. Our brains crave novelty. We tire of repetition. Algorithms serve us more of what we like, but our own preferences shift just to feel surprised again.

A fascinating 2023 study found that people overwhelmingly prefer human-created art over AI-generated art, especially when told who made it. The reason? We value effort. We sense depth. We respond not just to form, but to the story behind the form.

Even more telling, we’re attracted to what breaks patterns, not what follows them. Think of how memes or TikTok trends often work: something weird, off-beat, or unexpected catches fire, precisely because it doesn’t feel polished by an algorithm.

The Way Forward: Outsmarting the Machine

So how do we outmaneuver the machine? The answer isn’t to reject AI, but to use it as a mirror.

Inject randomness into your creative process. Flip routines. Collaborate with those outside your domain.

Seek dissonance. If AI suggests X, try Y or Z.

Lean into intuition. Your gut might be irrational — but that’s the point.

Let AI be the calculator, the synthesizer, even the partner. But don’t let it be the compass. Our job is to walk paths it can’t imagine — not because they’re efficient, but because they’re deeply, beautifully human.

In the end, the best reverse Turing test may be this: If the algorithm can’t quite figure you out, you’re probably doing something right.


About: Alex Michael Pawlowski is an advisor, investor and author who writes about topics around technology and international business.

For contact, collaboration or business inquiries please get in touch via lxpwsk1@gmail.com.

Sources:

[1] Foo, M. (2023, September 4). AI predicts human behavior with 85% accuracy. LinkedIn. https://www.linkedin.com/pulse/ai-predicts-human-behavior-85-accuracy-maverick-foo-hphjc/

[2] Hern, A. (2024, December 30). AI tools may soon manipulate people’s online decision-making, say researchers. The Guardian. https://www.theguardian.com/technology/2024/dec/30/ai-tools-may-soon-manipulate-peoples-online-decision-making-say-researchers

[3] Beketayev, K., & Runco, M. A. (2023). Artificial intelligence outperforms the average human in a creative thinking test. Nature Scientific Reports. https://www.nature.com/articles/s41598-023-40858-3

[4] Wikipedia contributors. (2023). The Good Judgment Project. Wikipedia. https://en.wikipedia.org/wiki/The_Good_Judgment_Project

[5] Seidl, D. A., et al. (2023). People prefer human-created art over AI-generated art: The role of perceived effort and emotional depth. Cognitive Research: Principles and Implications, 8(1). https://cognitiveresearchjournal.springeropen.com/articles/10.1186/s41235-023-00499-6
