The Visible Hand: How Agentic AI Makes Adam Smith’s Metaphor Real

#76: In a world where autonomous agents now think, learn, and act, the once-mythic “invisible hand” of the market has begun to take visible shape: in dashboards, datasets, and deployment logs.

What if Adam Smith’s most famous metaphor wasn’t a philosophical abstraction, but an early sketch of the systems we’re building today?

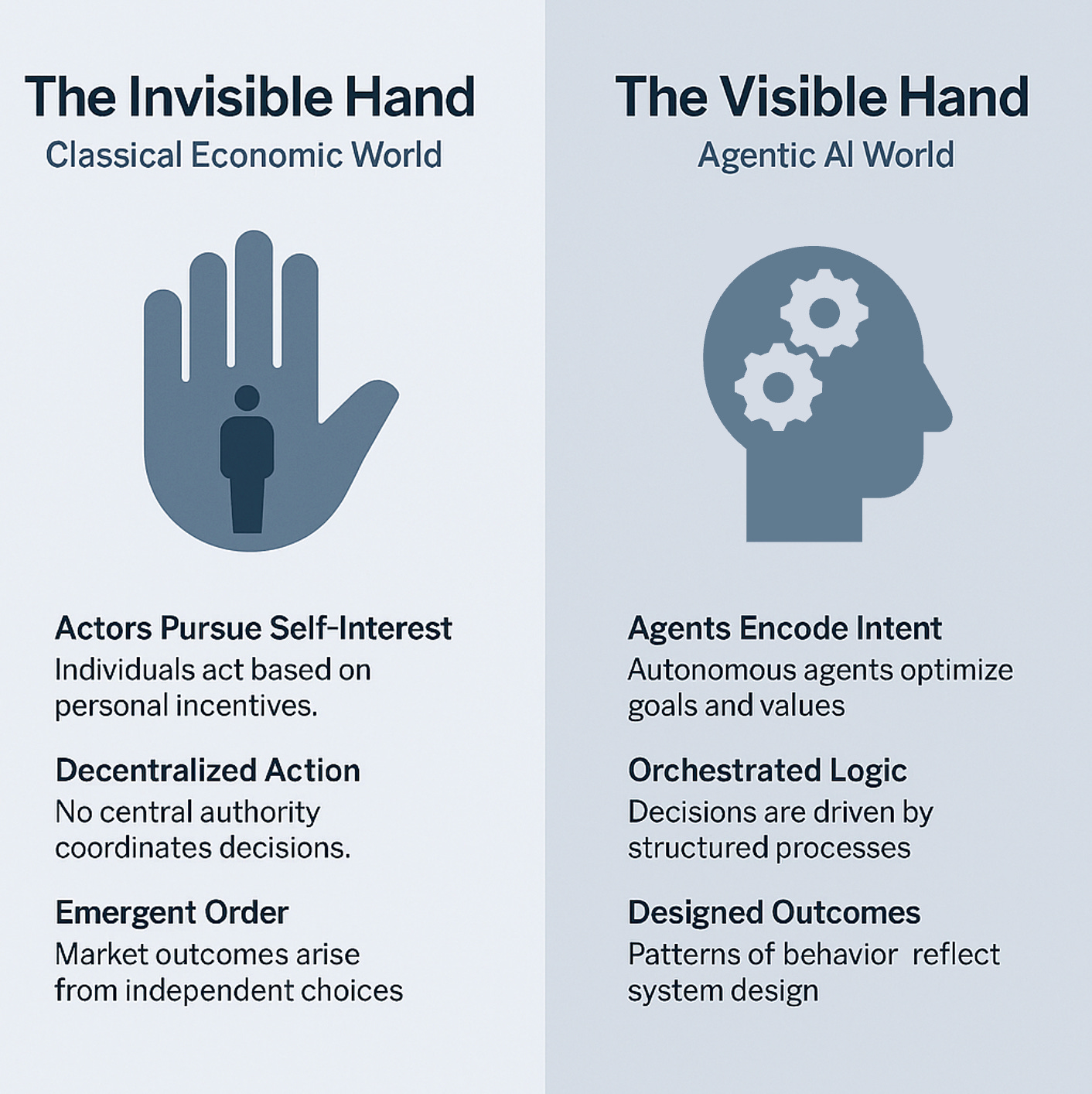

In The Wealth of Nations, Smith described a mechanism by which the self-interested actions of individuals, absent any central authority, could collectively yield efficient, even moral, outcomes. The “invisible hand” became shorthand for the beauty of emergent coordination. But that world was analog, unpredictable, and often slow. Markets moved on instinct, signals, and assumptions.

Now we live in a world where the market doesn’t just move—it reasons.

Agentic AI has entered the economy not as a tool, but as a new type of actor. One that doesn’t sleep, doesn’t speculate, and doesn’t fear risk in the way humans do. These agents plan, execute, adapt, and learn. They pursue goals across finance, logistics, compliance, and strategy. They don’t just optimize workflows—they redefine what a decision is.

Where Smith saw coordination emerging from self-interest, Agentic AI embeds intention in the infrastructure. What used to be decentralized action is now orchestrated logic. Each agent may act locally, but it operates within global objectives: system-level feedback, persistent memory, and outcome-driven metrics. It’s not just that the hand has become visible. It’s been architected.

Look at Minotaur Capital’s Taurient agent: it autonomously navigated markets to deliver a +23.5% fund return against a 17.4% benchmark. Or Salesforce’s Agentforce, resolving 84% of support tickets and reallocating over 2,000 human roles. Or MaIA at LVMH, quietly processing two million monthly internal requests across global luxury brands. These aren’t outliers. They’re precursors.

So what happens to human actors in this new paradigm? Are we still optimizing for self-interest, or is that interest now shaped—subtly and powerfully—by the systems around us?

In a traditional Smithian market, the actor’s self-interest is guided by scarcity, price signals, and opportunity. In an agentic system, it’s increasingly guided by feedback loops, automation, and predictive optimization. The shopkeeper once priced goods on local knowledge and gut instinct. Today, dynamic pricing agents do it in milliseconds, factoring in inventory, demand curves, and macroeconomic signals piped in invisibly.
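To make the shopkeeper’s shift concrete, here is a toy version of the kind of rule a pricing agent might apply. It is a minimal sketch under stated assumptions: the `MarketSignals` fields, the weights, and the floor/ceiling guardrail band are all hypothetical, and real dynamic pricing systems are far more elaborate.

```python
from dataclasses import dataclass


@dataclass
class MarketSignals:
    """Hypothetical inputs a pricing agent might receive (names are illustrative)."""
    inventory_ratio: float  # units on hand / target stock level (1.0 = on target)
    demand_index: float     # recent demand relative to baseline (1.0 = normal)
    macro_index: float      # macroeconomic adjustment, e.g. cost pressure (1.0 = neutral)


def dynamic_price(base_price: float, s: MarketSignals,
                  demand_weight: float = 0.5,
                  inventory_weight: float = 0.3,
                  floor: float = 0.8, ceiling: float = 1.5) -> float:
    """Toy multiplicative rule: raise the price when demand is high or stock is
    scarce, scale by a macro factor, then clamp to a human-set guardrail band."""
    demand_adj = 1 + demand_weight * (s.demand_index - 1)
    inventory_adj = 1 - inventory_weight * (s.inventory_ratio - 1)
    multiplier = demand_adj * inventory_adj * s.macro_index
    # Guardrails: the agent optimizes only within limits a person chose.
    multiplier = max(floor, min(ceiling, multiplier))
    return round(base_price * multiplier, 2)


# Neutral signals leave the base price unchanged.
print(dynamic_price(10.0, MarketSignals(1.0, 1.0, 1.0)))  # 10.0
# Scarce stock plus hot demand pushes the price up until the ceiling caps it.
print(dynamic_price(10.0, MarketSignals(0.2, 3.0, 1.0)))
```

The formula matters less than the shape: the gut call has become a function with explicit inputs, and the guardrail band is where a human decides how far the agent may go.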

What this means is that self-interest doesn’t disappear, but it becomes co-authored. Humans act, but agents influence. They redirect attention, reframe incentives, surface new options, and eliminate others. Autonomy shifts from the individual to the infrastructure.

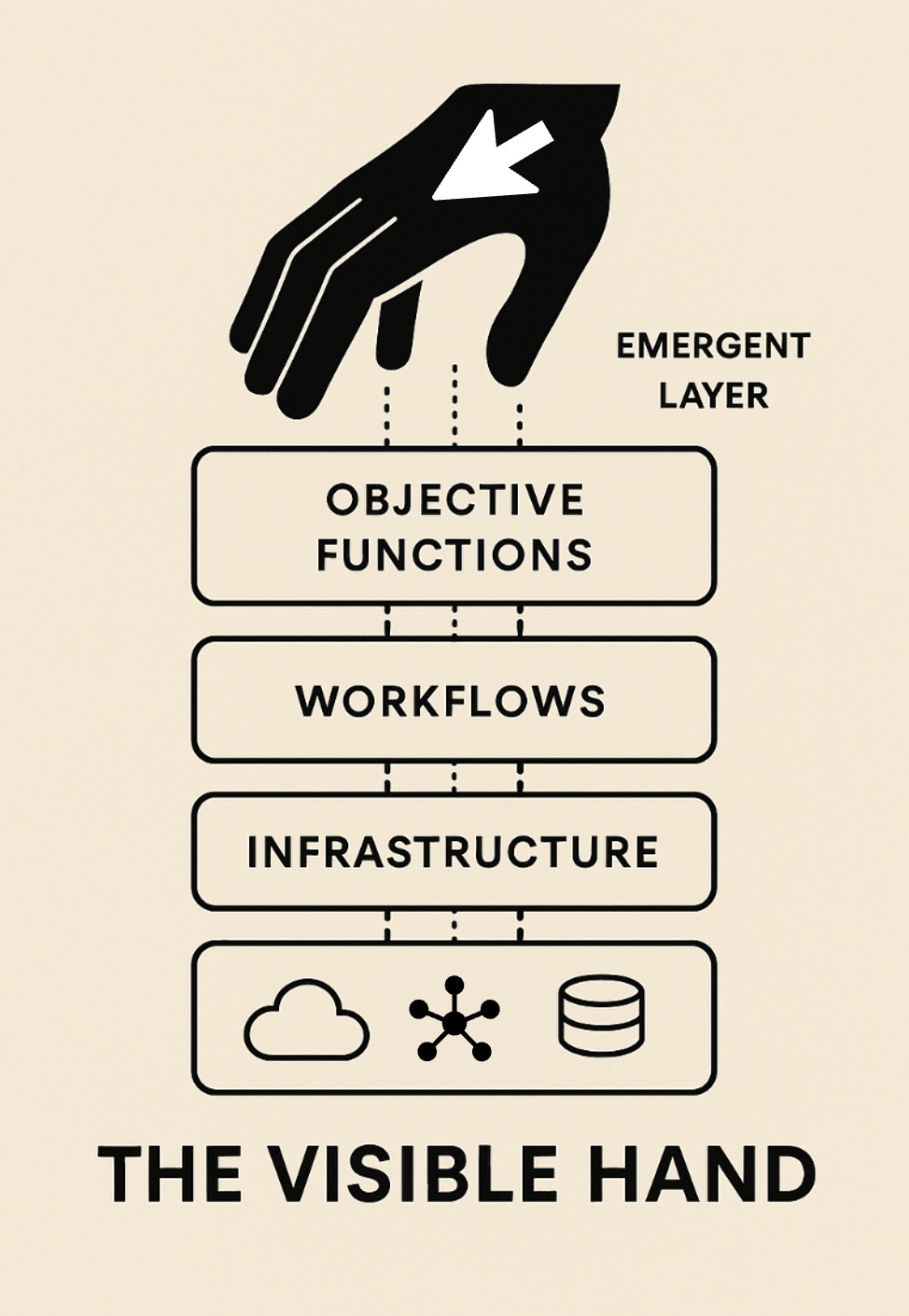

This is the architecture of the “visible hand.”

Let’s draw it out.

At the base is infrastructure: the compute, APIs, vector databases, and orchestration layers.

Above that, workflows: embedded automations that execute without waiting for manual instruction.

Then objective functions: not just KPIs, but encoded intent such as “optimize for resolution rate,” “maximize risk-adjusted return,” or “minimize regulatory exposure.”

At the top is the emergent layer: the visible hand itself—an observable, measurable pattern of decision-making where once there was only intuition.

Each layer feeds the one above it.

Data → Action → Intention → Outcome.

And that feedback loop reshapes the system in return.
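The four layers and the loop can be sketched in a few lines. Everything here is a hypothetical stand-in chosen for illustration: the ticket data, the confidence scores, the `error_penalty` weight, and the simple hill-climbing rule used as the feedback step are assumptions, not how Agentforce or any other product actually works. `infrastructure_layer` plays the data role, `workflow_layer` the action, `objective_function` the intention, and `run_stack` the outcome that feeds back into the workflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ticket:
    confidence: int   # agent's confidence (0-100) that it can resolve the ticket
    resolvable: bool  # ground truth: would an automated answer actually work?


def infrastructure_layer() -> list[Ticket]:
    """Data: a fixed, made-up batch of tickets standing in for APIs and databases."""
    return [Ticket(95, True), Ticket(85, True), Ticket(75, False),
            Ticket(65, True), Ticket(55, False), Ticket(40, False)]


def workflow_layer(tickets: list[Ticket], threshold: int) -> list[Ticket]:
    """Action: auto-resolve every ticket whose confidence meets the threshold."""
    return [t for t in tickets if t.confidence >= threshold]


def objective_function(tickets: list[Ticket], handled: list[Ticket],
                       error_penalty: float = 2.0) -> float:
    """Intention: 'optimize for resolution rate', minus a penalty for wrong answers."""
    correct = sum(t.resolvable for t in handled)
    wrong = len(handled) - correct
    return (correct - error_penalty * wrong) / len(tickets)


def run_stack(threshold: int = 90, step: int = 10, max_rounds: int = 10,
              error_penalty: float = 2.0) -> tuple[int, float]:
    """Outcome feeds back: hill-climb the workflow threshold on the objective's score."""
    tickets = infrastructure_layer()

    def score(th: int) -> float:
        return objective_function(tickets, workflow_layer(tickets, th), error_penalty)

    current = score(threshold)
    for _ in range(max_rounds):
        # Probe one step looser and one step stricter; move only if the score improves.
        best_th = max((threshold - step, threshold + step), key=score)
        if score(best_th) <= current:
            break  # no neighbor improves: the loop has settled
        threshold, current = best_th, score(best_th)
    return threshold, current


print(run_stack())              # settles at a threshold of 80
print(run_stack(threshold=50))  # gets stuck at 60 with a lower score
```

Starting from a different frame, the same loop settles on a worse answer. That is a small-scale version of the point that every outcome is only as aligned as the architecture allows.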

What’s radical is that this stack doesn’t just augment old markets. It builds new ones.

For example, in mental health tech, AI agents like Ellipsis Health’s “Sage” offer emotionally aware triage. That’s not replacing a therapist—it’s redefining the entry point to care. In synthetic biology, agent-led design loops create materials that can be optimized in real time for regulatory compliance, ESG score, and yield. That’s not just R&D automation—it’s market creation.

But there’s a caveat. If Smith trusted in moral sentiment, AI agents trust in design logic. And that means every outcome is only as aligned as the architecture allows.

Which brings us to strategy. The strategist’s role isn’t just to direct projects or defend turf—it’s to set the frame. To decide which layers are agentic, where human judgment belongs, and what values are encoded in the objective functions themselves. That’s not just governance—it’s authorship.

So what does the future look like?

A strategist at a modern firm won’t just ask, “What’s our advantage?” They’ll ask, “Where do agents compound our leverage? Where do they distort our goals? Are we building systems that act for us—or simply ones that act fast?”

Smith’s invisible hand worked because incentives were imperfect but natural. Today’s visible hand is optimized—but only as good as its inputs, constraints, and ethics.

The good news? We can see it now.

The challenge? We have to own it.

The hand is no longer a ghost in the market.

It’s a blueprint in our architecture.

And it reflects the world we choose to design.

Hit subscribe to get it in your inbox. And if this spoke to you:

➡️ Forward this to a strategy peer who’s feeling the same shift. We’re building a smarter, tech-equipped strategy community—one layer at a time.

Let’s stack it up.

A. Pawlowski | The Strategy Stack